AlphaGo!

http://c.brightcove.com/services/viewer/federated_f9?isVid=1&isUI=1

When you play any games, probably you have strategies or experiences. But you could not deny that some times you need luck, which data scientists would say a “random choice”. Monte Carlo Method provides only an approximate optimizer, thus giving you the luck to win a game.

Randomized Algorithms

Monte Carlo: more samples we have, more approximate the optimizer we get.

Las Vegas Algorithm: more samples we have, more chances we have to get the optimizer.

Depends on the problem, to choose a better algorithm. If samples are finite, and a solution is expected but not the best, MCTS is okay. If the best solution is wanted, then LVA is to be applied with no restrictions on the samples ( you know if with a bad luck you would probably sample EVERY record). In terms of Go, with limitation of computational time and stack space, and not THE BEST is wanted, so MCTS is the right choice.

Monte Carlo Method

Monte Carlo Method, designed to calculate the calculus via random sampling.

You are given a function , and want to know

:

We sample

from the range

based on a distribution function

. Then

could be treated as a function

times a probability density function

.

Then I becomes the average of the distribution of on

. Let’s sample x1,x2,… from

. For each i, we have:

Such that,

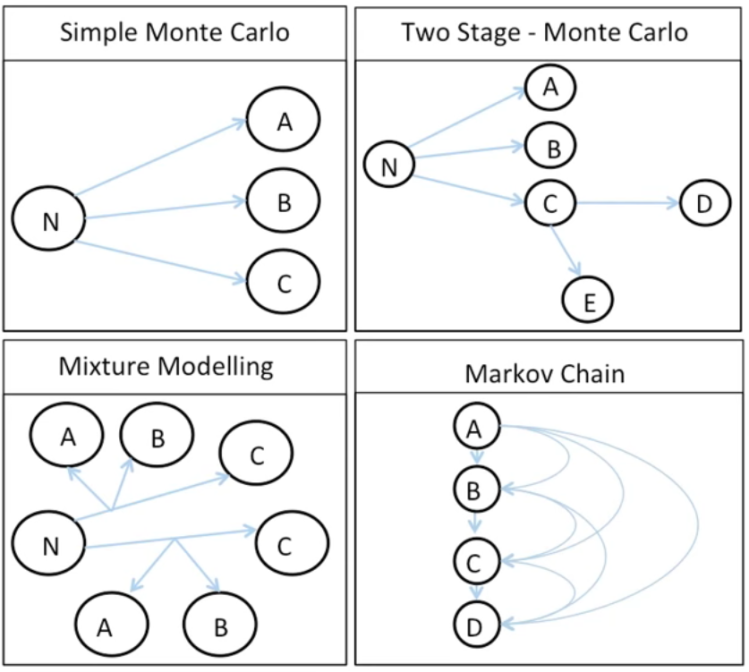

Four types of MC Methods

The edges are probabilities of choosing at each stage.

Mixture Modeling: Use Non-linear Probabilities.

Markov Chain Monte Carlo( MCMC): with a given distribution, we have algorithms to generate unbiased samples easily. But for any not-specified distributions, we choose MCMC as the method.

MCTS

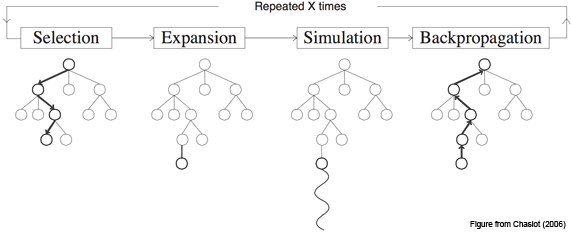

(1)Selection

Starting from the root node R, recursively select optimal child nodes. We want to expand a new node (aka to see all the children of the node unti we reach a leaf) T.

(2)Expansion

From the current node T, expand a child node if T is not terminating the whole game. (From the current situation, make a choice and reach a child.)

(3)Simulation

Starting from node T, black and white play in turns randomly. Eventually get a result of the game, win or lose. Update a winning rate of node T.

(4)Backpropagation

When simulation at node T finish, The parant node and all the ancesstor nodes update winning rate ( from down to the top) until reaches the root R. The winning rate at a node equals to an average of that of children nodes.

Then starts from step1 again. New nodes are added, and more accurate winning rates are calculated. Eventually pick up a max winning rate at one stage.

Tree search method is always a good way of providing dicisions. MCT is an asymmetric tree, and with MC method, it is an aheuristic algorithm (no requirements for experts in a certain domain to make resonable decisions).

But MCTS could take a number of iterations to converge to a good solution, making it an issue of large costs on both time and memory.

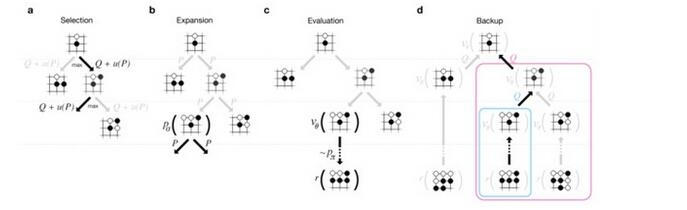

In the AlphaGo:

References:

http://jeffbradberry.com/posts/2015/09/intro-to-monte-carlo-tree-search/

http://mcts.ai/about/index.html

Click to access nature16961.pdf

https://www.zhihu.com/question/39916945/answer/83799720(Chinese)

Thanks for sharing. Expect next explanation on AlphaGo.

LikeLiked by 1 person

Thanks lol 🙂

LikeLike