For a brief introduction to Word2vec, please check this post. In this post, we load a pre-trained word embedding model, which is a large file containing all the word vectors trained on a huge corpus.

Download

Download here. I downloaded the GloVe one (glove.6B.50d); its vocabulary size is 400 thousand and its dimension is 50. It is one of the smaller models, trained on a “global” corpus (from Wikipedia). The same page also offers models trained on Twitter.

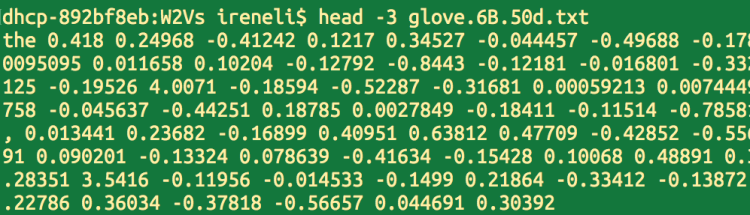

The model file has one entry per line: a word followed by the components of its vector, all separated by single spaces. As the screenshot shows, the file contains not only words but also punctuation marks such as commas.
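As a small illustration of that line layout, here is a minimal sketch that splits a single line into a word and a list of floats. The sample line and the helper name `parse_line` are made up for this example (the vector is shortened to 4 numbers); they are not taken from the real file.

```python
# Hypothetical sample line in the "word v1 v2 ... vN" layout described above.
# The values are invented and shortened to 4 dimensions for readability.
sample_line = "the 0.418 0.24968 -0.41242 0.1217\n"

def parse_line(line):
    """Split one line of the file into (word, vector as a list of floats)."""
    parts = line.rstrip().split(' ')
    word = parts[0]
    vector = [float(value) for value in parts[1:]]
    return word, vector

word, vector = parse_line(sample_line)
print(word, len(vector))
```

Punctuation entries such as "," follow the same layout, so they parse the same way.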

Loading

An easy way to load the model is to read the vector file line by line. Here I separate the words from the vectors, because the words will later be used to build the vocabulary.

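    import numpy as np

    filename = 'glove.6B.50d.txt'

    def loadGloVe(filename):
        vocab = []  # words and punctuation marks, one per line of the file
        embd = []   # the matching vectors, as lists of floats
        with open(filename, 'r', encoding='utf-8') as file:
            for line in file:
                row = line.strip().split(' ')
                vocab.append(row[0])
                # convert the string fields to floats so the array is numeric
                embd.append([float(value) for value in row[1:]])
        print('Loaded GloVe!')
        return vocab, embd

    vocab, embd = loadGloVe(filename)
    vocab_size = len(vocab)
    embedding_dim = len(embd[0])
    embedding = np.asarray(embd, dtype=np.float32)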

The vocab is a list of words and punctuation marks. The embedding is a large 2-D array holding all the word vectors, one row per entry in vocab. We set the embedding dimension to the number of columns of that array.
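Because row i of the embedding belongs to entry i of vocab, a word's vector can be found through its position in the list. The sketch below shows this with toy stand-ins for `vocab` and `embd`; the words, the numbers, and the helper names `word_to_row` and `vector_for` are invented for illustration.

```python
# Toy stand-ins for the `vocab` list and `embd` rows returned by the loader.
vocab = ['the', ',', 'of']
embd = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]

vocab_size = len(vocab)        # number of rows
embedding_dim = len(embd[0])   # number of columns

# Map each word to its row index so a vector can be found by word.
word_to_row = {word: row for row, word in enumerate(vocab)}

def vector_for(word):
    """Return the vector stored for `word`, or None if it is not in vocab."""
    row = word_to_row.get(word)
    return None if row is None else embd[row]

print(vocab_size, embedding_dim, vector_for(','))
```

A dictionary is used here only because searching a list with hundreds of thousands of entries for every word would be slow.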

Embedding Layer

After loading the vectors, we use them to initialize W, the weight matrix of the embedding layer in the network.

    import tensorflow as tf  # TensorFlow 1.x API

    W = tf.Variable(tf.constant(0.0, shape=[vocab_size, embedding_dim]),
                    trainable=False, name="W")
    embedding_placeholder = tf.placeholder(tf.float32, [vocab_size, embedding_dim])
    embedding_init = W.assign(embedding_placeholder)

Here W is first created as a Variable initialized with constant zeros. Be careful with the shape, [vocab_size, embedding_dim], which we only know after loading the model. With trainable set to False, W is not updated during training; change it to True for a trainable setup. Then embedding_placeholder is set up to receive the real values (fed through the feed_dict in sess.run()), and finally those values are assigned to W.

After creating a session and initializing the global variables, run the embedding_init operation, feeding in the 2-D array embedding.

sess.run(embedding_init, feed_dict={embedding_placeholder: embedding})

Vocabulary

Suppose you have raw documents. The first thing to do is build a vocabulary that maps each word to an id. TensorFlow uses the following call to look up embeddings:

    tf.nn.embedding_lookup(W, input_x)

where W is the large embedding matrix and input_x is a tensor of ids. In other words, it looks up embeddings by the given ids.
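To make the lookup concrete, here is a plain-Python sketch of the idea for a batch of id sequences: every id is replaced by the matching row of W. It only mimics the behaviour with nested lists and does not call TensorFlow; the function name and the toy values are assumptions for this example.

```python
def embedding_lookup(W, ids):
    """Replace every id in a batch of id sequences with row W[id]."""
    return [[W[i] for i in sequence] for sequence in ids]

# Toy embedding matrix with 3 rows (ids 0..2) and 2 columns.
W = [[0.0, 0.0],
     [0.1, 0.2],
     [0.3, 0.4]]

# Two "sentences" of three ids each.
input_x = [[1, 2, 0],
           [2, 2, 1]]

looked_up = embedding_lookup(W, input_x)
print(looked_up[0])
```

The result has one vector per id, so a batch of shape [2, 3] becomes a nested list of shape [2, 3, 2]. This is why the ids in input_x must refer to the same rows as the loaded vectors.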

So we use the words of the pre-trained model when we build the vocabulary, i.e. the word-to-id map.

    from tensorflow.contrib import learn

    # init vocab processor
    vocab_processor = learn.preprocessing.VocabularyProcessor(max_document_length)
    # fit the vocab from glove
    pretrain = vocab_processor.fit(vocab)
    # transform inputs
    x = np.array(list(vocab_processor.transform(your_raw_input)))

First, initialize the vocab processor by passing in max_document_length; by default, shorter sentences are padded with zeros. Then fit the processor on the vocab list to build the word-id map. Finally, use the processor to transform the raw documents.
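Readers point out in the comments that VocabularyProcessor keeps id 0 for an unknown token, so its ids may not line up with the rows of the loaded vectors. One possible way to keep the two aligned, sketched here in plain Python rather than with VocabularyProcessor, is to build the word-id map by hand and put a zero row at index 0. All helper names below are hypothetical, and the whitespace tokenization is a simplifying assumption.

```python
PAD_ID = 0  # row 0 is reserved for padding and unknown words (an assumption)

def build_word_ids(vocab):
    """Give word i the id i + 1, leaving id 0 free for padding/unknown."""
    return {word: i + 1 for i, word in enumerate(vocab)}

def pad_embeddings(embd):
    """Prepend an all-zero row so that row id matches the word's id."""
    dim = len(embd[0])
    return [[0.0] * dim] + [list(row) for row in embd]

def transform(documents, word_ids, max_document_length):
    """Turn whitespace-tokenized documents into fixed-length id lists."""
    rows = []
    for doc in documents:
        ids = [word_ids.get(token, PAD_ID) for token in doc.split()]
        ids = ids[:max_document_length]
        ids += [PAD_ID] * (max_document_length - len(ids))
        rows.append(ids)
    return rows

# Toy data standing in for the loaded vocab and vectors.
vocab = ['hello', 'world']
embd = [[0.1, 0.2], [0.3, 0.4]]

word_ids = build_word_ids(vocab)
padded = pad_embeddings(embd)
x = transform(['hello world', 'hello there'], word_ids, 4)
print(x)
```

With this layout the embedding matrix has vocab_size + 1 rows, so the shape of W would have to grow by one row as well.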

Now you are ready to train your own network with pre-trained word vectors!

can you provide the full source code of this tutorial please?

Oh, sorry, the code is related to my current research. I am still trying to improve it, so I hope to upload it to GitHub soon 😀

I was facing the same issue, so here is a detailed Python notebook tutorial on word embedding in TensorFlow. Check it out if you like: https://github.com/monk1337/word_embedding-in-tensorflow/blob/master/Use%20Pre-trained%20word_embedding%20in%20Tensorflow.ipynb

I think you did not look closely at the source code of VocabularyProcessor. The vocabulary is initialized with an ‘UNK’ token whose id is 0; you can see it at https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/learn/python/learn/preprocessing/categorical_vocabulary.py. So the ids your code returns will be 1 larger than the correct ones.

Hi Shilei, thanks for the reply. I did check my model using “list(model.vocab.keys())”, which gives you a list of the words in your vocabulary. Then for each word, you can use “model[word]” to access its word embedding.

Thanks.

It seems that the gensim lib excludes the “UNK” thing (in my previous reply). I will check the TF one, thanks for the reminder 😀

I agree with you. I think he can add vocab[0] = [0]*emb_size

After applying “pretrain = vocab_processor.fit(vocab)”, the vocabulary size in vocab_processor differs from the size of vocab:

len(vocab_processor.vocabulary_) == 2762098

len(vocab) == 3000000

Has anyone run into a similar situation? Thank you

There is a reply:

“Simpson Family

I think that the problem is the vocab_processor doesn’t collect the quotation mark and I am still thinking about how to deal with this issue, because this issue may cause the mistake when using the method embedding_lookup…

”

I did not have the same issue. Did you get it solved?

Thanks.

Just a small detail: you haven’t included the following line in your code:

> import numpy as np

and I think it might be confusing to people who haven’t worked directly with np before. This is unlikely to cause problems, since people working in deep learning have probably used numpy too, but I just wanted to mention it. Maybe it will save the day for someone 😀

Hi ahmad, thanks for the suggestion. I just updated 😀

Thanks.

Simpson Family

I think that the problem is the vocab_processor doesn’t collect the quotation mark and I am still thinking about how to deal with this issue, because this issue may cause the mistake when using the method embedding_lookup…

I am not sure if this is the reason, did you find out how to solve it?

Thanks.

Hi Irene,

Thanks for writing this post. I found it really helpful.

Could you please elaborate on the form of the raw input in the last line, i.e.:

x = np.array(list(vocab_processor.transform(your_raw_input)))

I tried:

x = np.array(list(vocab_processor.transform(['hello world'])))

And got this result:

array([[12846, 78, 0, 0, 0, 0, 0, 0, 0, 0]], dtype=int64)

But vocab[12846] gives ‘carriage’ and vocab[78] gives ‘government’.

I thought vocab[12846] should give ‘hello’ and vocab[78] should give ‘world’…

Note: I set max_document_length to be 10

Hi there! Sorry, I have not worked in this area for a very long time. I hope you have solved it?

Thanks.
