In daily conversation, we often repeat something mentioned earlier in the dialogue, such as the names of people or organizations: “Hi, my name is Pikachu”, “Hi, Pikachu,…” There is a high probability that the word “Pikachu” is not in the vocabulary extracted from the training data. To handle this, the paper (Incorporating Copying Mechanism in Sequence-to-Sequence Learning) proposes CopyNet, which brings a copying mechanism to seq2seq models with an encoder-decoder structure. See my earlier post for the prerequisite knowledge.

Structure

Encoder: a bi-directional RNN is used. We define $h_t$ to be the RNN's hidden state at step $t$, and $c$ to be the context vector, where

$$c = \phi(\{h_1, \dots, h_{T_S}\})$$

summarizes the hidden states.

Note that we define the concatenation of vectors $\mathbf{M} = \{h_1, \dots, h_{T_S}\}$ as the short-term memory of the words from $x_1$ to $x_{T_S}$, where $T_S$ is the length of the sentence. $\mathbf{M}$ is considered to be the new representation of the sentence. We also define $s_t$ to be the decoder state; normally, at step $t$, we have

$$s_t = f(y_{t-1}, s_{t-1}, c)$$

which considers the previous output of the decoder, the previous decoder state, and the context vector.
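To make the encoder side concrete, here is a minimal pure-Python sketch that builds $\mathbf{M}$ with a bi-directional RNN and takes one decoder step. It is not the paper's implementation: the vanilla tanh cell, mean pooling as the choice of $\phi$, and the simple form used for $f$ are all assumptions for illustration, and the function names (`encode`, `mean_pool`, `decoder_step`) are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rnn_step(W: Matrix, U: Matrix, x: Vector, h_prev: Vector) -> Vector:
    """Vanilla tanh RNN cell (an assumption): h = tanh(W x + U h_prev)."""
    return [math.tanh(a + b) for a, b in zip(matvec(W, x), matvec(U, h_prev))]


def encode(xs: List[Vector], W_f: Matrix, U_f: Matrix,
           W_b: Matrix, U_b: Matrix, hidden: int) -> List[Vector]:
    """Return M = [h_1, ..., h_{T_S}], where h_t = [forward_t ; backward_t]."""
    T = len(xs)
    fwd: List[Vector] = [[] for _ in range(T)]
    bwd: List[Vector] = [[] for _ in range(T)]
    h = [0.0] * hidden
    for t in range(T):                  # left-to-right pass
        h = rnn_step(W_f, U_f, xs[t], h)
        fwd[t] = h
    h = [0.0] * hidden
    for t in reversed(range(T)):        # right-to-left pass
        h = rnn_step(W_b, U_b, xs[t], h)
        bwd[t] = h
    return [f + b for f, b in zip(fwd, bwd)]  # list '+' concatenates


def mean_pool(M: List[Vector]) -> Vector:
    """Hypothetical choice of phi: average the encoder states."""
    return [sum(col) / len(M) for col in zip(*M)]


def decoder_step(A: Matrix, B: Matrix, C: Matrix,
                 y_prev: Vector, s_prev: Vector, c: Vector) -> Vector:
    """Hypothetical f: s_t = tanh(A y_{t-1} + B s_{t-1} + C c)."""
    terms = zip(matvec(A, y_prev), matvec(B, s_prev), matvec(C, c))
    return [math.tanh(a + b + d) for a, b, d in terms]


# Toy run: T_S = 3 one-dimensional input embeddings, hidden size 2 per direction.
xs = [[1.0], [0.5], [-1.0]]
W = [[0.5], [-0.3]]                 # input-to-hidden (2 x 1)
U = [[0.1, 0.0], [0.0, 0.1]]        # hidden-to-hidden (2 x 2)
M = encode(xs, W, U, W, U, hidden=2)
c = mean_pool(M)                    # 4-dimensional context vector
s1 = decoder_step([[0.2], [0.1]], U, [[0.1] * 4, [-0.1] * 4], [0.3], [0.0, 0.0], c)
```

Each $h_t$ concatenates the forward and backward states at position $t$, so every entry of $\mathbf{M}$ carries context from both sides of the sentence. A GRU or LSTM cell could replace `rnn_step` without changing the rest of the sketch.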

Decoder: the decoder can access $\mathbf{M}$ in multiple ways when predicting the output. We will explain how the decoder works in the rest of this post.

Prediction

There are two natural ways to produce a word: copy it from the input sentence, or generate it from a vocabulary. We define $\mathcal{V} = \{v_1, \dots, v_N\}$ as our vocabulary, which contains frequently used words, and $\mathcal{X}$ as the set of unique words in the input sentence (each sentence has a different collection). A special token UNK represents all other unknown words.
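A small sketch of how $\mathcal{V}$ and $\mathcal{X}$ might be built for the Pikachu example. The token spelling `<unk>`, placing UNK in the last vocabulary slot, and feeding OOV source words to the encoder as UNK are assumptions of this sketch, not details taken from the paper; `build_sets` and `encoder_ids` are hypothetical names.

```python
from typing import Dict, List, Tuple

UNK = "<unk>"  # hypothetical spelling of the UNK token


def build_sets(vocab: List[str], source: List[str]) -> Tuple[Dict[str, int], List[str]]:
    """Return (index of V plus UNK, X = unique source words in first-seen order)."""
    v_index = {w: i for i, w in enumerate(vocab)}
    v_index[UNK] = len(vocab)               # UNK occupies the last slot
    x_unique = list(dict.fromkeys(source))  # de-duplicate, keep order
    return v_index, x_unique


def encoder_ids(source: List[str], v_index: Dict[str, int]) -> List[int]:
    """Map source words to ids; words outside V fall back to UNK."""
    return [v_index.get(w, v_index[UNK]) for w in source]


vocab = ["hi", "my", "name", "is", ","]
source = ["hi", ",", "my", "name", "is", "Pikachu"]
v_index, X = build_sets(vocab, source)
ids = encoder_ids(source, v_index)
print(X)    # ['hi', ',', 'my', 'name', 'is', 'Pikachu']
print(ids)  # [0, 4, 1, 2, 3, 5]  ("Pikachu" becomes UNK, id 5)
```

Under this choice, generate-mode can only ever produce UNK for "Pikachu"; copy-mode, which scores source positions directly, is what can output the word itself.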

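To generate a new word at decoding step $t$, we consider both generate-mode and copy-mode by simply adding their probabilities:

$$p(y_t \mid s_t, y_{t-1}, c_t, \mathbf{M}) = p(y_t, \mathrm{g} \mid s_t, y_{t-1}, c_t, \mathbf{M}) + p(y_t, \mathrm{c} \mid s_t, y_{t-1}, c_t, \mathbf{M})$$

where $c_t$ is the context vector at step $t$.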

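We define the two types of probabilities below:

$$p(y_t, \mathrm{g} \mid \cdot) = \begin{cases} \frac{1}{Z} e^{\psi_g(y_t)}, & y_t \in \mathcal{V} \\ 0, & y_t \in \mathcal{X} \cap \bar{\mathcal{V}} \\ \frac{1}{Z} e^{\psi_g(\mathrm{UNK})}, & y_t \notin \mathcal{V} \cup \mathcal{X} \end{cases}$$

$$p(y_t, \mathrm{c} \mid \cdot) = \begin{cases} \frac{1}{Z} \sum_{j: x_j = y_t} e^{\psi_c(x_j)}, & y_t \in \mathcal{X} \\ 0, & \text{otherwise} \end{cases}$$

where $\psi_g(\cdot)$ and $\psi_c(\cdot)$ are the scoring functions of generate-mode and copy-mode, and $Z = \sum_{v \in \mathcal{V} \cup \{\mathrm{UNK}\}} e^{\psi_g(v)} + \sum_{j=1}^{T_S} e^{\psi_c(x_j)}$ is the normalization term shared by the two modes. For generating, the word gets a generate-mode score if it is in the vocabulary (first case) or, through UNK, if it is an unknown word (third case); a word that appears only in the input sentence gets zero generate-mode probability (second case). For copying, the word gets a nonzero probability only if it appears in the input sentence. To be more specific, there are four cases for the word $y_t$: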

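* is UNK, i.e., it is in neither $\mathcal{V}$ nor $\mathcal{X}$

* is a word from the vocabulary $\mathcal{V}$ only

* is a word from $\mathcal{X}$ only

* is a word from both $\mathcal{X}$ and the vocabulary $\mathcal{V}$ at the same time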
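Putting the pieces together, the sketch below computes $p(y_t) = p(y_t, \mathrm{g} \mid \cdot) + p(y_t, \mathrm{c} \mid \cdot)$ from given scores, branching on the four cases above. The scores are made-up numbers, the function names are hypothetical, and the UNK spelling is an assumption; the point it illustrates is that both modes share a single normalization term $Z$ (the sum of all exponentiated generate-mode and copy-mode scores).

```python
import math
from typing import Dict, List

UNK = "<unk>"  # hypothetical UNK token


def case_of(word: str, vocab: List[str], source: List[str]) -> str:
    """Which of the four cases applies to y_t = word."""
    in_v, in_x = word in vocab, word in source
    if in_v and in_x:
        return "V and X"
    if in_v:
        return "V only"
    if in_x:
        return "X only"
    return "UNK"


def copynet_prob(word: str, vocab: List[str], source: List[str],
                 psi_g: Dict[str, float], psi_c: List[float]) -> float:
    """p(y_t = word) = p(word, g) + p(word, c), sharing one normaliser Z.

    psi_g maps every word of V, plus UNK, to its generate-mode score;
    psi_c[j] is the copy-mode score of source position j.
    """
    Z = (sum(math.exp(psi_g[v]) for v in vocab + [UNK])
         + sum(math.exp(s) for s in psi_c))
    case = case_of(word, vocab, source)
    if case in ("V only", "V and X"):
        p_g = math.exp(psi_g[word]) / Z     # generate from V
    elif case == "X only":
        p_g = 0.0                           # cannot be generated
    else:
        p_g = math.exp(psi_g[UNK]) / Z      # generated as UNK
    # Copy mode: add up every position j where x_j == word.
    p_c = sum(math.exp(s) for x, s in zip(source, psi_c) if x == word) / Z
    return p_g + p_c


vocab = ["hi", "my", "name", "is"]
source = ["hi", "Pikachu", "hi"]
psi_g = {"hi": 0.5, "my": 0.1, "name": -0.2, "is": 0.0, UNK: -1.0}
psi_c = [0.3, 1.2, -0.4]
# Candidates: every word of V, the X-only word, and one stand-in unknown word.
total = sum(copynet_prob(w, vocab, source, psi_g, psi_c)
            for w in vocab + ["Pikachu", "Bulbasaur"])
```

`total` equals 1 up to rounding, a quick check that the shared $Z$ makes the two modes compete in a single softmax: "hi" collects both its generate-mode score and the copy scores of its two positions, while "Pikachu" can only be copied.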

Now we will define the scoring functions.

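Generate-Mode: when the word is from the vocabulary or is UNK, we have

$$\psi_g(y_t = v_i) = \mathbf{v}_i^\top \mathbf{W}_o s_t, \quad v_i \in \mathcal{V} \cup \{\mathrm{UNK}\}$$

where $\mathbf{W}_o \in \mathbb{R}^{(N+1) \times d_s}$ is a trainable matrix, $d_s$ is the dimension of $s_t$, and $\mathbf{v}_i$ is the one-hot indicator vector of $v_i$.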
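The generate-mode score $\psi_g(y_t = v_i) = \mathbf{v}_i^\top \mathbf{W}_o s_t$, with $\mathbf{v}_i$ the one-hot vector of $v_i$, is just a linear read-out of the decoder state. A minimal sketch with toy sizes ($N = 2$, $d_s = 2$) and hypothetical function names: computing $\mathbf{W}_o s_t$ once gives the score of every entry of $\mathcal{V} \cup \{\mathrm{UNK}\}$, and multiplying by the one-hot vector merely selects entry $i$.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Matrix (list of rows) times vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def one_hot(i: int, n: int) -> Vector:
    """Indicator vector v_i of length n."""
    return [1.0 if k == i else 0.0 for k in range(n)]


def psi_g_all(W_o: Matrix, s_t: Vector) -> Vector:
    """Scores for all N+1 entries of V plus UNK at once: W_o s_t."""
    return matvec(W_o, s_t)


def psi_g(W_o: Matrix, s_t: Vector, i: int) -> float:
    """Literal v_i^T W_o s_t with a one-hot v_i."""
    v_i = one_hot(i, len(W_o))
    return sum(a * b for a, b in zip(v_i, matvec(W_o, s_t)))


W_o = [[1.0, 0.0],    # row for v_1
       [0.0, 2.0],    # row for v_2
       [0.5, 0.5]]    # row for UNK  (N = 2, d_s = 2)
s_t = [0.5, -1.0]
print(psi_g_all(W_o, s_t))  # [0.5, -2.0, -0.25]
print(psi_g(W_o, s_t, 1))   # -2.0
```

In practice one computes `psi_g_all` directly; the one-hot form only mirrors the notation.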

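Copy-Mode: we consider the case where the word comes from the original input sentence:

$$\psi_c(y_t = x_j) = \sigma\left(h_j^\top \mathbf{W}_c\right) s_t, \quad x_j \in \mathcal{X}$$

where $\mathbf{W}_c \in \mathbb{R}^{d_h \times d_s}$ is a trainable matrix and $\sigma$ is a non-linear activation (the paper uses tanh). In the copy-mode probability $p(y_t, \mathrm{c} \mid \cdot)$, we sum over all positions $j$ with $x_j = y_t$, because a word might occur multiple times in the input sentence.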
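A matching sketch for the copy-mode score $\psi_c(y_t = x_j) = \tanh(h_j^\top \mathbf{W}_c)\, s_t$, with tanh playing the role of $\sigma$. The toy values and function names are hypothetical. `copy_mass` shows the summation over repeated positions: a word that appears twice in the input collects the exponentiated scores of both positions (before dividing by $Z$).

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def psi_c(h_j: Vector, W_c: Matrix, s_t: Vector) -> float:
    """tanh(h_j^T W_c) s_t, with W_c of shape d_h x d_s (tanh as sigma)."""
    d_s = len(s_t)
    row = [sum(h_j[i] * W_c[i][k] for i in range(len(h_j))) for k in range(d_s)]
    return sum(math.tanh(r) * s for r, s in zip(row, s_t))


def copy_scores(M: List[Vector], W_c: Matrix, s_t: Vector) -> List[float]:
    """One copy-mode score per source position j."""
    return [psi_c(h, W_c, s_t) for h in M]


def copy_mass(word: str, source: List[str], scores: List[float]) -> float:
    """Unnormalised copy mass: sum of exp(psi_c(x_j)) over positions with x_j == word."""
    return sum(math.exp(sc) for x, sc in zip(source, scores) if x == word)


source = ["hi", "Pikachu", "hi"]
M = [[0.0, 0.0], [1.0, 0.0], [0.0, 0.0]]   # toy h_j vectors, d_h = 2
W_c = [[1.0, 0.0], [0.0, 1.0]]             # identity, d_h = d_s = 2
s_t = [1.0, 1.0]
scores = copy_scores(M, W_c, s_t)           # [0.0, tanh(1.0), 0.0]
print(copy_mass("hi", source, scores))      # 2.0 (two positions, each exp(0))
```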

Update States

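We introduced the decoder state for a generic attention-based Seq2seq model. In CopyNet, we change how the previous output $y_{t-1}$ is represented when updating the decoder state, so that it carries more than its word embedding. In the paper, it is defined as

$$\left[e(y_{t-1}); \zeta(y_{t-1})\right]^\top$$

where the first term is the embedding of the word $y_{t-1}$, and the second is a weighted sum of the encoder states $h_\tau$:

$$\zeta(y_{t-1}) = \sum_{\tau=1}^{T_S} \rho_{t\tau} h_\tau, \qquad \rho_{t\tau} = \begin{cases} \frac{1}{K} p(x_\tau, \mathrm{c} \mid s_{t-1}, \mathbf{M}), & x_\tau = y_{t-1} \\ 0, & \text{otherwise} \end{cases}$$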

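The authors call $\zeta(y_{t-1})$ the selective read. The word $y_{t-1}$ might occur at multiple positions in the input, so we sum the corresponding hidden states in a weighted way. Here, $K = \sum_{\tau': x_{\tau'} = y_{t-1}} p(x_{\tau'}, \mathrm{c} \mid s_{t-1}, \mathbf{M})$ is a normalization term that makes the weights $\rho_{t\tau}$ a probability distribution over the positions where $y_{t-1}$ occurs, the same role that $Z$ plays in the generate-mode and copy-mode probabilities.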
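The selective read can be sketched the same way. Recall $\zeta(y_{t-1}) = \sum_\tau \rho_{t\tau} h_\tau$, where $\rho_{t\tau}$ is nonzero only at positions with $x_\tau = y_{t-1}$. In the sketch, `copy_probs[tau]` stands for the per-position copy probabilities $p(x_\tau, \mathrm{c} \mid s_{t-1}, \mathbf{M})$ from the previous step (made-up values), and all names are hypothetical. Dividing the matching weights by $K$ makes them sum to 1.

```python
from typing import List

Vector = List[float]


def selective_read(y_prev: str, source: List[str],
                   copy_probs: List[float], M: List[Vector]) -> Vector:
    """zeta(y_{t-1}) = sum_tau rho_{t tau} h_tau.

    copy_probs[tau] stands for p(x_tau, c | s_{t-1}, M) from the previous step.
    """
    # Keep only the positions where x_tau == y_{t-1}; all others get rho = 0.
    weights = [p if x == y_prev else 0.0 for x, p in zip(source, copy_probs)]
    K = sum(weights)
    if K == 0.0:                    # y_{t-1} never occurs in the source
        return [0.0] * len(M[0])
    rho = [w / K for w in weights]  # sums to 1 over matching positions
    return [sum(r * h[k] for r, h in zip(rho, M)) for k in range(len(M[0]))]


def decoder_input(embedding: Vector, zeta: Vector) -> Vector:
    """[e(y_{t-1}); zeta(y_{t-1})] as one concatenated vector."""
    return embedding + zeta


source = ["hi", "Pikachu", "hi"]
copy_probs = [0.125, 0.5, 0.375]
M = [[1.0, 0.0], [0.0, 1.0], [3.0, 0.0]]
zeta = selective_read("hi", source, copy_probs, M)
print(zeta)                         # [2.5, 0.0]
print(decoder_input([0.7], zeta))   # [0.7, 2.5, 0.0]
```

If $y_{t-1}$ does not occur in the input, every $\rho_{t\tau}$ is 0 and $\zeta(y_{t-1})$ is the zero vector, so the decoder input reduces to the embedding followed by zeros.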

Experiments

Synthetic dataset: in the paper, the authors use a set of rules to construct a dataset of simple copying patterns, for example:

abcdxef -> cdxg

abydxef -> xdig

Compared with the Enc-Dec and RNNSearch models, CopyNet achieves competitive accuracy (see the paper for details).

Summarization

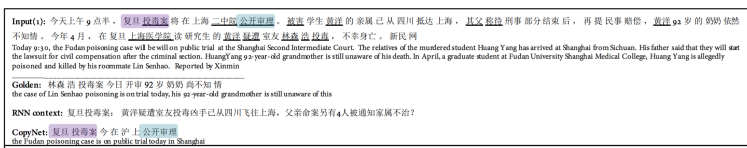

An example result is shown below.

The Chinese text shows the tokenization, and the underlined words are OOV. The highlighted words are the copied ones (their copy-mode probability is higher than their generate-mode probability).

So while computing score_g (generate mode), is the vocabulary the one from the entire training data or only from the current batch?

We need to create embeddings for both OOV words and vocabulary words, right? Because we give ids from the word2index mapping as input to the encoder, we create an embedding layer of size vocab_size X emd_size, where vocab_size covers the entire training data. The paper uses the term instance vocabulary, but I am not sure which vocab_size is used for computing score_g and score_c.


Replied to your email just now.
